Eugene Hwang, Jeongmi Lee, 2021-2022

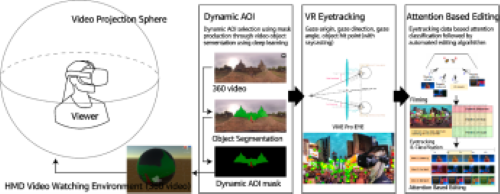

This study tests a method that uses attention data collected from viewers of 360-degree video to automatically produce a fully edited lecture video. Because of COVID-19, demand for online video and virtual reality is now high worldwide. In particular, lectures, conference presentations, and classes have largely moved to non-face-to-face formats. As video content and virtual lectures have grown, demand for edited video has grown with them. However, video editing is labor-intensive and time-consuming. This study therefore develops an automatic video editing pipeline driven by attention information, obtained from viewers' eye-tracking data in a virtual space, combined with deep-learning-based area-of-interest (AOI) segmentation.
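The summary does not describe how attention data become editing decisions. A minimal sketch of one plausible step, under stated assumptions and not the authors' method, is shown here. It assumes each eye-tracking sample has already been mapped onto an AOI label by the segmentation model. It then picks the most-looked-at AOI in each fixed time window and merges consecutive windows into shots. The names `plan_shots` and `Shot`, the AOI labels, and the 2-second window are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Shot:
    start: float  # seconds, inclusive
    end: float    # seconds, exclusive
    aoi: str      # hypothetical AOI label, e.g. "speaker" or "slides"


def plan_shots(gaze_hits: List[Tuple[float, str]], window: float = 2.0) -> List[Shot]:
    """Choose the most-attended AOI per time window and merge runs into shots.

    gaze_hits: (timestamp in seconds, AOI label the gaze sample fell on).
    Assumption: labels come from projecting eye-tracking samples onto a
    per-frame AOI segmentation of the 360-degree video.
    """
    if not gaze_hits:
        return []

    # Count gaze samples per AOI within consecutive fixed-length windows.
    buckets: Dict[int, Counter] = {}
    for t, aoi in gaze_hits:
        buckets.setdefault(int(t // window), Counter())[aoi] += 1

    shots: List[Shot] = []
    for idx in sorted(buckets):
        # most_common(1) breaks ties in favor of the label seen first.
        winner, _ = buckets[idx].most_common(1)[0]
        start, end = idx * window, (idx + 1) * window
        # Extend the previous shot if it shows the same AOI and is contiguous;
        # windows with no gaze samples leave a gap and start a new shot.
        if shots and shots[-1].aoi == winner and shots[-1].end == start:
            shots[-1].end = end
        else:
            shots.append(Shot(start, end, winner))
    return shots


if __name__ == "__main__":
    hits = [(0.1, "speaker"), (0.5, "speaker"), (1.2, "slides"),
            (2.3, "speaker"), (3.0, "speaker"),
            (4.1, "slides"), (4.5, "slides"), (5.9, "speaker")]
    for shot in plan_shots(hits):
        print(shot)
```

With these made-up samples, the sketch yields a "speaker" shot from 0 to 4 s followed by a "slides" shot from 4 to 6 s. A real pipeline would likely also smooth short shots and map each AOI label to a camera crop of the 360-degree frame.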
